Another eventful month on the road: we attended events in different cities, and John took the stage to give hot tips on becoming a Supercommunicator.

The highlight was the special print edition of Outperform, Eppo’s magazine, which we were lucky enough to receive. To celebrate one year of the hub that gathers brilliant minds in Experimentation, Ryan Lucht and the Eppo team curated their favorite pieces into a book.

Outperform’s content ranges from culture and news to statistics and software engineering. We highly recommend it and will share more of its pieces in future editions, as it is essential reading for anyone in Experimentation and Product.

Here are some notes from our favorite piece so far.

4 principles for making Experimentation count

By Lindsay M. Pettingill, shared by Eppo in Outperform

This is a timeless piece for anyone who experiments in their work, and one worth revisiting from time to time to keep ourselves accountable in our role.

At the time of writing, Lindsay had been a data scientist at Airbnb for over two years and had supported the growth of experimentation from about 100 experiments a week to over 700. These four principles supported her work in making impactful changes to the website.

1. Product experimentation should be hypothesis-driven

As Marina mentioned in the video, her colleagues have always asked her for a strong, data-backed hypothesis behind any test idea. The question she hears most at work is: “This is a great idea, but what is supporting this theory?”

Lindsay, in this section, reminds us that without a hypothesis, “we’re untethered, easily distracted by what appear to be positive results, but could well be statistical flukes”.

This reminds us of the untethered spacewalk Dale Gardner made in 1984 to retrieve a satellite: without a hypothesis, we drift through empty space hoping our change brings a good result, with nothing keeping us from getting lost among the loose ends of our data. Gardner got his satellite, but finding meaningful information in a sea of data is arguably harder than walking in space.

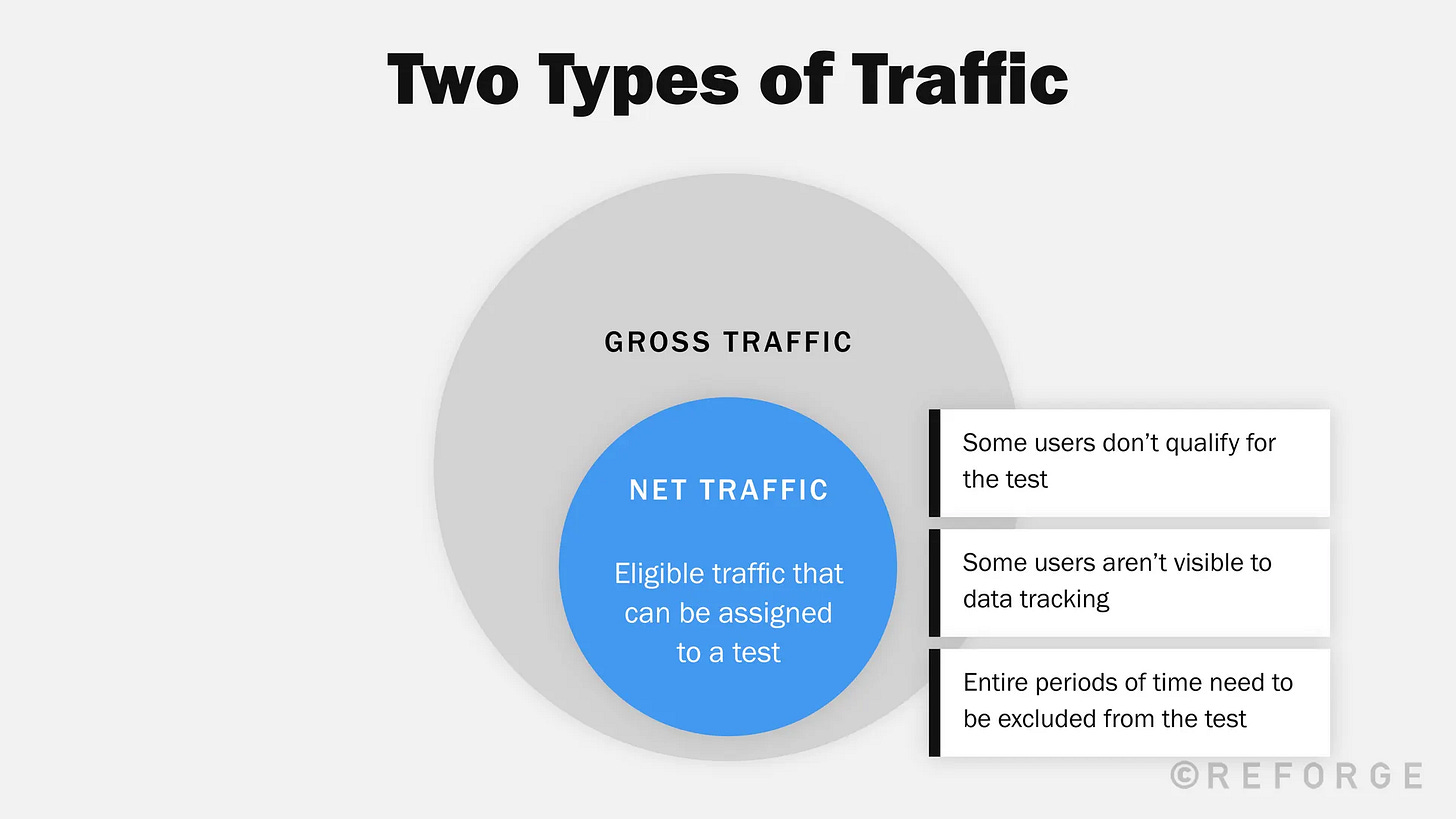

2. Defining the proper “exposed population” is paramount

An experiment is nothing without the right people seeing the right change, at the perfect time. And, as we all know, this is not an easy part of our job.

To find the right people, Lindsay’s first question to engineers is “When does exposure happen and how is it determined?”, and she won’t stop talking about it until they’re 100% certain of the answer.

You can check that the right people are seeing your experiment by adding sanity metrics to it. For example, if you see sign-ups in a test that targets logged-in users, it’s a serious sign that the wrong people are being exposed, and your results will be compromised. That is the time to stop and rethink the execution.
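A sanity metric can be as simple as counting events that should be impossible for the targeted population. The sketch below assumes a hypothetical export of exposure events with `is_logged_in` and `signed_up` flags (illustrative field names, not taken from any particular tool) and flags a logged-in-only test that shows logged-out exposures or sign-ups.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ExposureEvent:
    # Hypothetical schema: adapt the field names to your own event logs.
    user_id: str
    variant: str
    is_logged_in: bool  # was the user logged in when exposed?
    signed_up: bool     # did the user sign up after exposure?


def sanity_check_logged_in_test(events: list[ExposureEvent]) -> list[str]:
    """Return warnings for a test that should only expose logged-in users."""
    warnings = []
    logged_out = sum(1 for e in events if not e.is_logged_in)
    signups = sum(1 for e in events if e.signed_up)
    if logged_out:
        warnings.append(f"logged-out exposures: {logged_out}")
    if signups:
        # Logged-in users already have accounts, so any sign-up is a red flag.
        warnings.append(f"sign-ups among exposed users: {signups}")
    return warnings


# Hypothetical sample: u3 should never have entered this test.
events = [
    ExposureEvent("u1", "control", is_logged_in=True, signed_up=False),
    ExposureEvent("u2", "treatment", is_logged_in=True, signed_up=False),
    ExposureEvent("u3", "treatment", is_logged_in=False, signed_up=True),
]
print(sanity_check_logged_in_test(events))
```

Any non-empty result is the cue to pause the test and revisit how exposure is determined, rather than to start reading its results.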

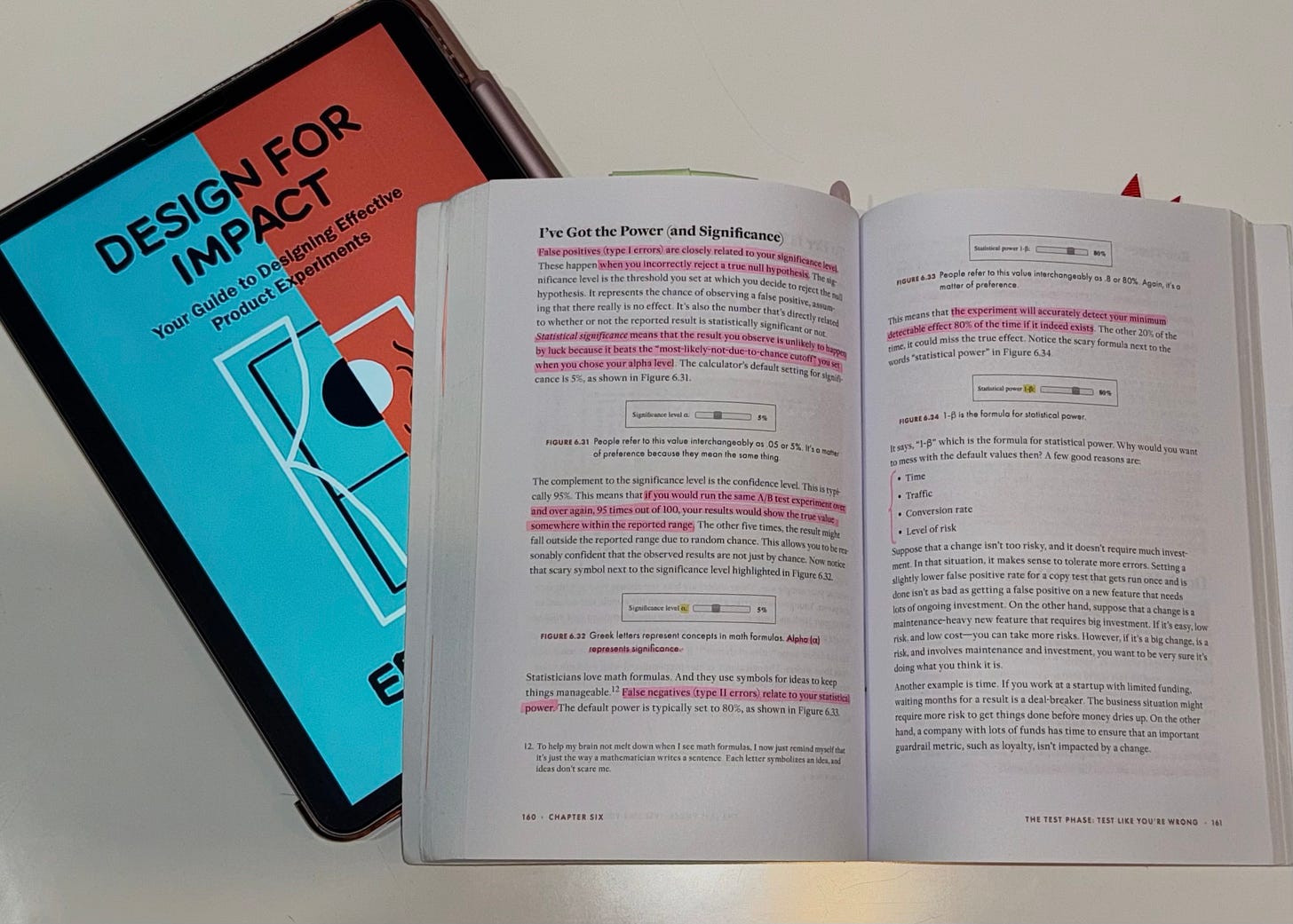

3. Understanding power is essential

Power is more than the formula Erin Weigel teaches in her book. As you probably know, without enough of it none of us could reliably detect the effects an experiment might have.

Lindsay’s suggestions for getting power right are:

Use a page’s historical data to get a sense of its base rates before starting an experiment.

Go big or go home: don’t launch an experiment unless you believe the change is bold enough to produce a detectable effect.

If you don’t have enough power to learn about a potential change, turn to other research methods to understand your users better.

As John says in the video, an easy way to check is a trustworthy pre-test calculator; we still often use the OG CXL A/B test calculator.
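For a sense of what such a calculator does under the hood, here is a minimal sketch using the textbook normal-approximation formula for a two-sided, two-proportion z-test with a 50/50 split. It is not CXL’s implementation, which may use different assumptions, and the historical and traffic figures are hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(base_rate: float, relative_mde: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Users needed in each of two equal variants to detect a relative lift of
    `relative_mde` on a conversion rate (two-sided two-proportion z-test)."""
    p1 = base_rate
    p2 = base_rate * (1 + relative_mde)
    p_bar = (p1 + p2) / 2
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # ~1.96 for alpha = 0.05
    z_power = z.inv_cdf(power)          # ~0.84 for 80% power
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_power * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Hypothetical historical data for the page under test.
historical_visitors = 200_000
historical_conversions = 6_000
base_rate = historical_conversions / historical_visitors  # 3% baseline

n = sample_size_per_variant(base_rate, relative_mde=0.10)
weekly_visitors = 40_000  # hypothetical traffic, split across both variants
weeks_needed = ceil(2 * n / weekly_visitors)
print(f"{n} users per variant, about {weeks_needed} weeks of traffic")
```

With a 3% base rate, detecting a 10% relative lift needs roughly 53,000 users per variant, just under three weeks of this hypothetical traffic. If the change you have in mind can’t plausibly move the metric that much, that is the “go big or go home” moment: make the change bolder or pick another research method.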

4. Failure is an opportunity: use it

Experiments don’t fail; hypotheses are proven wrong.

If you only focus on “successful experiments”, i.e. the ones that move the metrics up, you are missing tons of insights from “failed” tests.

As Morgan Housel says, “Read fewer forecasts and more history. Study more failures and fewer successes.” We all know it’s easy to learn why a test was successful and how to iterate and win from previous victories, but learning from “failures” gives you the golden answer to “what does NOT work for my customers?”

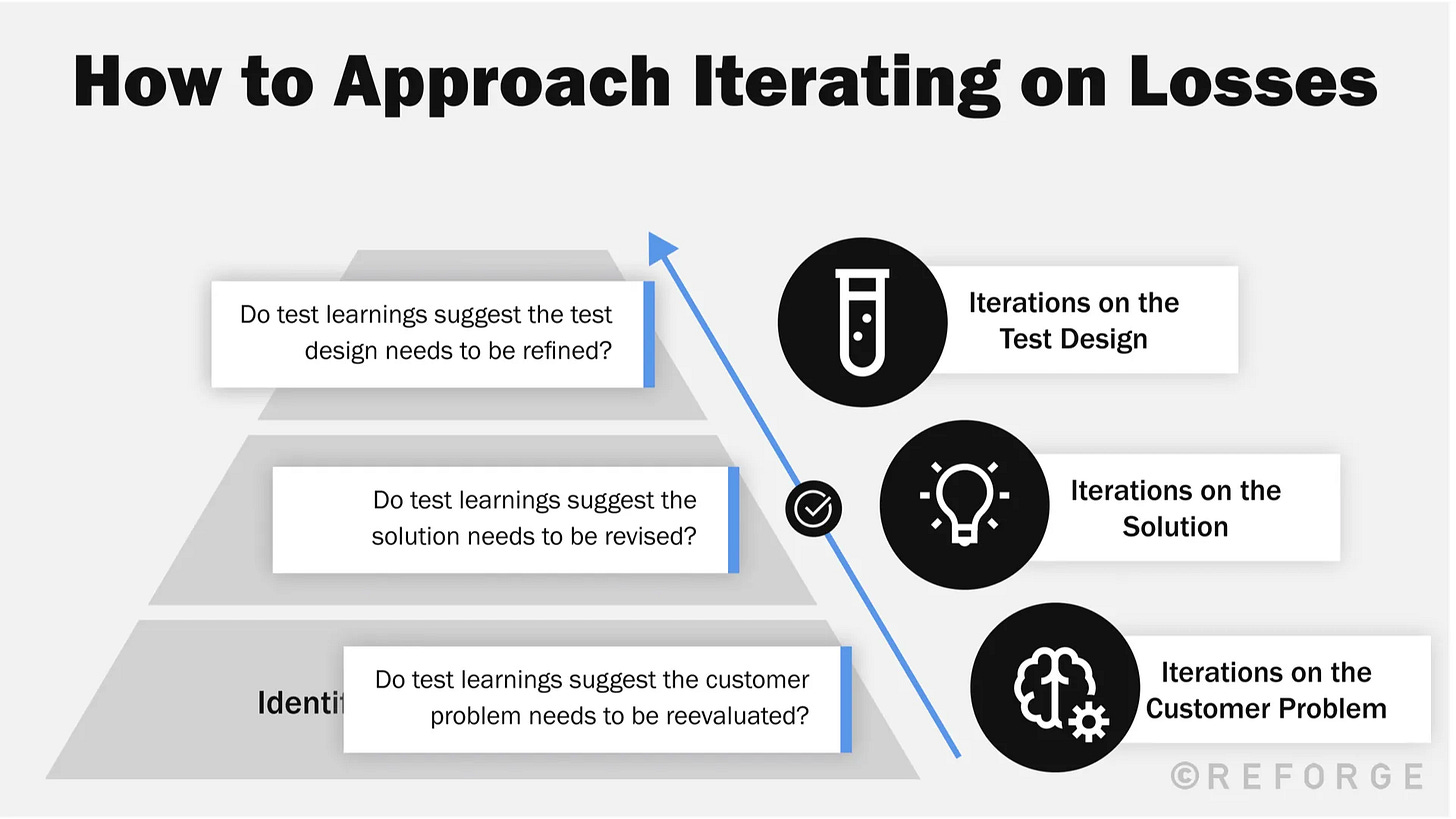

Lindsay recommends dealing with failure by asking yourself:

Was the hypothesis wrong, or was the implementation/execution of the hypothesis flawed?

Are the metrics moving together in the ways that I would expect?

Am I testing bold enough hypotheses?
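The second question lends itself to a quick check. The sketch below assumes you wrote down, before launch, which direction each metric should move if the hypothesis holds; the metric names, lifts, and the `min_abs_lift` threshold are hypothetical, and the threshold is only a crude stand-in for proper significance testing on each metric.

```python
from __future__ import annotations

# Hypothetical expectations: +1 means the metric should rise, -1 that it should fall.
EXPECTED_DIRECTION = {
    "search_clicks": +1,
    "listing_views": +1,
    "bookings": +1,
    "bounce_rate": -1,
}


def inconsistent_metrics(observed_lift: dict[str, float],
                         expected: dict[str, int],
                         min_abs_lift: float = 0.0) -> list[str]:
    """Return metrics whose observed relative lift moves against the expected direction."""
    flagged = []
    for metric, direction in expected.items():
        lift = observed_lift.get(metric)
        if lift is None or abs(lift) <= min_abs_lift:
            continue  # missing, or too small to call a direction
        if (lift > 0) != (direction > 0):
            flagged.append(metric)
    return flagged


# Hypothetical relative lifts (treatment vs. control) from a finished test.
observed = {"search_clicks": 0.04, "listing_views": 0.02,
            "bookings": -0.01, "bounce_rate": 0.005}
print(inconsistent_metrics(observed, EXPECTED_DIRECTION))
```

A pattern like this one (more clicks and views, fewer bookings) could point to a problem in the execution rather than a wrong hypothesis, which is exactly the distinction Lindsay’s first question asks you to make.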

The key point is that an experiment that doesn’t move the needle doesn’t necessarily mean the theory is wrong.
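One way to see this is to look at the confidence interval around a “flat” result instead of only its p-value. This minimal sketch uses a simple Wald interval for the difference between two conversion rates; the counts are hypothetical.

```python
from __future__ import annotations

from math import sqrt
from statistics import NormalDist


def diff_confidence_interval(conv_a: int, n_a: int, conv_b: int, n_b: int,
                             confidence: float = 0.95) -> tuple[float, float]:
    """Wald confidence interval for the absolute difference in conversion rate (B - A)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    se = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # ~1.96 for 95%
    diff = p_b - p_a
    return diff - z * se, diff + z * se


# Hypothetical "flat" test: 3.0% vs 3.2% conversion on 10,000 users each.
low, high = diff_confidence_interval(300, 10_000, 320, 10_000)
print(f"95% CI for the lift: [{low:.4f}, {high:.4f}]")
```

The interval runs from about -0.28 to +0.68 percentage points. It includes zero, so the test isn’t significant, but it also includes lifts above 0.6 points, a 20% relative improvement on a 3% base. The data is compatible both with no effect and with a sizeable one: the test is inconclusive, which is not the same as proving the theory wrong.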

Conclusion

Lindsay reminds us that Experimentation is hard work, and a sophisticated tool doesn’t make much difference if we don’t put in the effort ourselves.

That’s how we can shape and move businesses we believe in!

Don’t miss

Around the UK this month? Get your tickets for BrightonSEO on October 23rd and 24th, and we’ll see you by the beach!

John and Marina will be covering the ultimate event for anyone who wants to immerse themselves in talks from leaders in Search, Content, and Influencer Marketing.

This time, we are partnering with Ellie Hughes and the Eclipse Group to capture memories and ideas from brilliant minds.

Up next

Marina will travel to London to dog-sit for our friend David Sanchez, watch Hamilton in the West End with John, and head to Brighton for BrightonSEO.

John will be on the move again throughout October: first London and Brighton, then Cyprus for the first eToro ($ETOR) Popular Investor conference since the company went public. By the end of the month, he’ll be in Turkey to explore the secrets of Constantinople, the old capital of the Byzantine Empire.